Introduction

As the 1970s opened, new chemistries and the war on cancer seized center stage. U.S. President Richard Nixon (taking a moment off from his pursuit of the Vietnam War) established the National Cancer Program, popularly known as the war on cancer, with an initial half-billion dollars of new funding. Carcinogens were one of the concerns in the controversy surrounding the polluted Love Canal. And cancer was especially prominent in the emotional issue of the “DES daughters,” women at risk for cancer solely because of diethylstilbestrol (DES), the medication prescribed to their mothers during pregnancy.

New cancer treatments were developed; chemotherapy joined the ranks of routine treatments, especially for breast cancer. New drugs appeared. Cyclosporin provided a long-sought breakthrough with its ability to prevent immune rejection of tissue grafts and organ transplants. Rifampicin proved its worth for treating tuberculosis; cimetidine (Tagamet), the first histamine H2-receptor blocker, became available for treating peptic ulcers. Throughout the decade, improvements in analytical instrumentation, including high-pressure liquid chromatography (HPLC) and mass spectrometry, made drug purification and analysis easier than ever before. In this period, NMR was transformed into the medical imaging system MRI.

The popular environmental movement that took root in the ideology of the previous decade blossomed politically in 1970 as the first Earth Day was celebrated, the U.S. Clean Air Act was passed, and the U.S. Environmental Protection Agency (EPA) was established. Some of the optimism of the Sixties faded as emerging plagues, such as Lyme and Legionnaires’ disease in the United States and Ebola and Lassa fever in Africa, reopened the book on infectious diseases.
The World Health Organization continued its smallpox eradication campaign, but as DDT was gradually withdrawn because of its detrimental effect on the environment, efforts to eradicate malaria and sleeping sickness were imperiled. Ultimately, the 1970s saw the start of another kind of infection, genetic engineering fever, as recombinant DNA chemistry dawned. In 1976, in a move to capitalize on the new discoveries, Genentech Inc. (San Francisco) was founded and became the prototypical entrepreneurial biotech company. The company’s very existence forever transformed the nature of technology investments and the pharmaceutical industry.

Cancer wars

In the 1950s, a major move was made to develop chemotherapies for various cancers. By 1965, the NCI had instituted a program specifically for drug development with participation from the NIH, industry, and universities. The program screened 15,000 new chemicals and natural products each year for potential effectiveness. Still, by the 1970s, there seemed to be a harsh contrast between medical success against infectious diseases and the limited progress against cancer. The 1971 report of the National Panel of Consultants on the Conquest of Cancer (called the Yarborough Commission) formed the basis of the 1971 National Cancer Act signed by President Nixon. The aim of the act was to make “the conquest of cancer a national crusade” with an initial financial boost of $500 million (which was allocated under the direction of the long-standing NCI). The Biomedical Research and Research Training Amendment of 1978 added basic research and prevention to the mandate for the continuing program.

Daughters and sons

DES was prescribed from the early 1940s until 1971 to help prevent certain complications of pregnancy, especially those that led to miscarriages. By the 1960s, DES use was decreasing because of evidence that the drug lacked effectiveness and might indeed have damaging side effects, although no ban or general warning to physicians was issued. According to the University of Pennsylvania Cancer Center (Philadelphia), there are few reliable estimates of the number of women who took DES, although one source estimates that 5–10 million women either took the drug during pregnancy or were exposed to it in utero.

In 1970, a study in the journal Cancer described a rare form of vaginal cancer, clear cell adenocarcinoma (CCAC). The following year, a study in The New England Journal of Medicine documented the association between in utero DES exposure and the development of CCAC. By the end of that year, the FDA issued a drug bulletin warning of potential problems with DES and advised against its use during pregnancy. So-called DES daughters experienced a wide variety of effects including infertility, reproductive tract abnormalities, and increased risks of vaginal cancer. More recently, a number of DES sons were also found to have increased levels of reproductive tract abnormalities.

In 1977, inspired by the tragedies caused by giving thalidomide and DES to pregnant women, the FDA recommended against including women of child-bearing potential in the early phases of drug testing except for life-threatening illnesses. The discovery of DES in beef from hormone-treated cattle after the FDA drug warning led in 1979 to what many complained was a long-delayed ban on its use by farmers. The DES issue was one of several that helped focus part of the war against cancer as a fight against environmental carcinogens (see below).

Cancer research/cancer “cures”

Other research developments helped expand knowledge of the mechanics and causes of cancer. In 1978, for example, the cancer suppressor gene p53 was first observed by David Lane at the University of Dundee. By 1979, it was possible to use DNA from malignant cells to transform cultured mouse cells into tumors, creating an entirely new tool for cancer study.

Although many treatments for cancer existed at the beginning of the 1970s, few real cures were available. Surgical intervention was the treatment of choice for apparently defined tumors in readily accessible locations. In other cases, surgery was combined with or replaced by chemotherapy and/or radiation therapy. Oncology remained, however, as much an art as a science in terms of actual cures. Too much depended on too many variables for treatments to be uniformly applicable or uniformly beneficial.

There were, however, some obvious successes in the 1970s. Donald Pinkel of St. Jude Children’s Research Hospital (Memphis) developed the first cure for acute lymphoblastic leukemia, a childhood cancer, by combining chemotherapy with radiotherapy. The advent of allogeneic (foreign donor) bone marrow transplants in 1968 made such treatments possible, but the real breakthrough in using powerful radiation and chemotherapy came with the development of autologous marrow transplantation. The method was first used in 1977 to cure patients with lymphoma. Autologous transplantation involves removing and usually cryopreserving a patient’s own marrow and reinfusing that marrow after the administration of high-dosage drug or radiation therapy. Because autologous marrow can contain contaminating tumor cells, a variety of methods have been established to attempt to remove or deactivate them, including antibodies, toxins, and even in vitro chemotherapy. E. Donnall Thomas of the Fred Hutchinson Cancer Research Center (Seattle) was instrumental in developing bone marrow transplants and received the 1990 Nobel Prize in Physiology or Medicine for his work.
Although bone marrow transplants were originally used primarily to treat leukemias, by the end of the century, they were used successfully as part of high-dose chemotherapy regimens for Hodgkin’s disease, multiple myeloma, neuroblastoma, testicular cancer, and some breast cancers.

In 1975, a WHO survey showed that death rates from breast cancer had not declined since 1900. Radical mastectomy was ineffective in many cases because of late diagnosis and the prevalence of undetected metastases. The search for alternative and supplemental treatments became a high research priority. In 1975, a large cooperative American study demonstrated the benefits of using phenylalanine mustard following surgical removal of the cancerous breast. Combination therapies rapidly proved even more effective; and by 1976, CMF (cyclophosphamide, methotrexate, and 5-fluorouracil) therapy was developed at the Istituto Nazionale Tumori in Milan, Italy. It proved to be a radical improvement over surgery alone and rapidly became the chemotherapy of choice for this disease.

A new environment

The concept of carcinogens entered the popular consciousness. Ultimately, the combination of government regulations and public fears of toxic pollutants in food, air, and water inspired improved technologies for monitoring extremely small amounts of chemical contaminants. Advances were made in gas chromatography, ion chromatography, and especially the EPA-approved combination of GC/MS. The 1972 Clean Water Act and the Federal Insecticide, Fungicide, and Rodenticide Act added impetus to the need for instrumentation and analysis standards. By the 1980s, many of these improvements in analytical instrumentation had profound effects on the scientific capabilities of the pharmaceutical industry. One example is atomic absorption spectroscopy, which in the 1970s made it possible to assay trace metals in foods to the parts-per-billion range. The new power of such technologies enabled nutritional researchers to determine, for the first time, that several trace elements (most usually considered pollutants) were actually necessary to human health. These included tin (1970), vanadium (1971), and nickel (1973).

In 1974, the issue of chemical-induced cancer became even broader when F. Sherwood Rowland of the University of California–Irvine and Mario Molina of MIT demonstrated that chlorofluorocarbons (CFCs) such as Freon could erode the UV-absorbing ozone layer. The predicted results were increased skin cancer and cataracts, along with a host of adverse effects on the environment. This research led to a ban of CFCs in aerosol spray cans in the United States. Rowland and Molina shared the 1995 Nobel Prize in Chemistry for their ozone work with Paul Crutzen of the Max Planck Institute for Chemistry (Mainz, Germany).

By 1977, asbestos toxicity had become a critical issue. Researchers at the Mount Sinai School of Medicine (New York) discovered that asbestos inhalation could cause cancer after a latency period of 20 years or more.
This discovery helped lead to the passage of the Toxic Substances Control Act, which mandated that the EPA inventory the safety of all chemicals marketed in the United States before July 1977 and required manufacturers to provide safety data 90 days before marketing any chemicals produced after that date. Animal testing increased where questions existed, and the issue of chemical carcinogenicity became prominent in the public mind and in the commercial sector.

Also in the 1970s, DDT was gradually withdrawn from vector eradication programs around the world because of the growing environmental movement that resulted in an outright ban on the product in the United States in 1972. This created a continuing controversy, especially with regard to WHO attempts to eliminate malaria and sleeping sickness in the developing world. In many areas, however, the emergence of DDT-resistant insects already pointed to the eventual futility of such efforts. Although DDT was never banned completely except by industrialized nations, its use declined dramatically for these reasons.

Recombinant DNA and more

In 1970, Werner Arber of the Biozentrum der Universität Basel (Switzerland) discovered restriction enzymes. Hamilton O. Smith at Johns Hopkins University (Baltimore) verified Arber’s hypothesis with a purified bacterial restriction enzyme and showed that this enzyme cuts DNA in the middle of a specific symmetrical sequence. Daniel Nathans, also at Johns Hopkins, demonstrated the use of restriction enzymes in the construction of genetic maps. He also developed and applied new methods of using restriction enzymes to solve various problems in genetics. The three scientists shared the 1978 Nobel Prize in Physiology or Medicine for their work in producing the first genetic map (of the SV40 virus).

In 1972, rDNA was born when Paul Berg of Stanford University demonstrated the ability to splice together blunt-end fragments of widely disparate sources of DNA. That same year, Stanley Cohen from Stanford and Herbert Boyer from the University of California, San Francisco, met at a Waikiki Beach delicatessen where they discussed ways to combine plasmid isolation with DNA splicing. They had the idea to combine the use of the restriction enzyme EcoRI (which Boyer had discovered in 1970 and found capable of creating “sticky ends”) with DNA ligase (discovered in the late 1960s) to form engineered plasmids capable of producing foreign proteins in bacteria, the basis for the modern biotechnology industry. By 1973, Cohen and Boyer had produced their first recombinant plasmids. They received a patent on this technology for Stanford and UCSF that would become one of the biggest money-makers in pharmaceutical history.

The year 1975 was the year of DNA sequencing. Walter Gilbert and Allan Maxam of Harvard University and Fred Sanger of Cambridge University simultaneously developed different methods for determining the sequence of bases in DNA with relative ease and efficiency.
For this accomplishment, Gilbert and Sanger shared the 1980 Nobel Prize in Chemistry.

By 1976, Silicon Valley venture capitalist Robert Swanson teamed up with Herbert Boyer to form Genentech Inc. (short for genetic engineering technology). It was the harbinger of a wild proliferation of biotechnology companies over the next decades. Genentech’s goal of cloning human insulin in Escherichia coli was achieved in 1978, and the technology was licensed to Eli Lilly. In 1977, the first mammalian gene (the rat insulin gene) was cloned into a bacterial plasmid by Axel Ullrich of the Max Planck Institute. In 1978, somatostatin was produced using rDNA techniques. The recombinant DNA era grew from these beginnings and had a major impact on pharmaceutical production and research in the 1980s and 1990s.

High tech/new mech

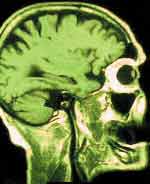

In 1971, the first computerized axial tomography (CAT) scanner was installed in England. By 1972, the first whole-body computed tomography (CT) scanner was marketed by Pfizer. That same year, the Brookhaven Linac Isotope Producer went on line, helping to increase the availability of isotopes for medical purposes and basic research. In 1977, the first use of positron emission tomography (PET) for obtaining brain images was demonstrated.

The 1970s saw a revolution in computing with regard to speed, size, and availability. In 1970, Ted Hoff at Intel invented the first microprocessor. In 1975, the first personal computer, the Altair, was put on the market by American inventor Ed Roberts. Also in 1975, William Henry Gates III and Paul Gardner Allen founded Microsoft. And in 1976, the prototype for the first Apple Computer (marketed as the Apple II in 1977) was developed by Stephen Wozniak and Steven Jobs. It signaled the movement of personal computing from the hobbyist to the general public and, more importantly, into pharmaceutical laboratories where scientists used PCs to augment their research instruments.

In 1975, Edwin Mellor Southern of the University of Oxford invented a blotting technique for analyzing restriction enzyme digest fragments of DNA separated by electrophoresis. This technique became one of the most powerful technologies for DNA analysis and manipulation and was the conceptual template for the development of northern blotting (for RNA analysis) and western blotting (for proteins).

Although HPLC systems had been commercially available from ISCO since 1963, they were not widely used until the 1970s when, under license to Waters Associates and Varian Associates, the demands of biotechnology and clinical practice made such systems seem a vibrant new technology. By 1979, Hewlett-Packard was offering the first microprocessor-controlled HPLC, a technology that represented the move to computerized systems throughout the life sciences and instrumentation in general.
Throughout the decade, GC and MS became routine parts of life science research, and the first linkage of LC/MS was offered by Finnigan. These instruments would have increasing impact throughout the rest of the century.

(Re)emerging diseases

There were certainly enough new diseases and “old friends” to control. For example, in 1972, the first cases of recurrent polyarthritis (Lyme disease) were recorded in Old Lyme and Lyme, CT, ultimately resulting in the spread of the tick-borne disease throughout the hemisphere.

The rodent-borne arenaviruses were identified in the 1960s and shown to be the causes of numerous diseases seen since 1934 in both developed and less-developed countries. Particularly deadly was the newly discovered Lassa fever virus, first identified in Africa in 1969 and responsible for small outbreaks in 1970 and 1972 in Nigeria, Liberia, and Sierra Leone, with a mortality rate of some 36–38%.

Then, in 1976, epidemics of a different hemorrhagic fever occurred simultaneously in Zaire and Sudan. Fatalities reached 88% in Zaire (now known as the Democratic Republic of the Congo) and 53% in Sudan, resulting in a total of 430 deaths. Ebola virus, named after a small river in northwest Zaire, was isolated from both epidemics. In 1977, a fatality was attributed to Ebola in a different area of Zaire. The investigation of this death led to the discovery that there were probably two previous fatal cases. A missionary physician contracted the disease in 1972 while conducting an autopsy on a patient thought to have died of yellow fever. In 1979, the original outbreak site in Sudan generated a new episode of Ebola hemorrhagic fever that resulted in 34 cases with 22 fatalities. Investigators were unable to discover the source of the initial infections. The dreaded nature of the disease (the copious bleeding, the pain, and the lack of a cure) sent ripples of concern throughout the world’s medical community.

In 1976, the unknown “Legionnaires’ disease” appeared at a convention in Philadelphia, killing 29 American Legion convention attendees. The cause was identified as the newly discovered bacterium Legionella.
Also in 1976, a Nobel Prize in Physiology or Medicine was awarded to Baruch Blumberg (originally at the NIH, then at the University of Pennsylvania) for the discovery in 1963 of a new disease agent, hepatitis B, for which he helped to develop a blood test in 1971.

Nonetheless, one bit of excellent news at the end of the decade made such “minor” outbreaks of new diseases seem trivial in the scope of human history. From 1350 B.C., when the first recorded smallpox epidemic occurred during the Egyptian–Hittite war, to A.D. 180, when a large-scale epidemic killed between 3.5 and 7 million people (coinciding with the first stages of the decline of the Roman Empire), through the millions of Native Americans killed in the 16th century, smallpox was a quintessential scourge. But in 1979, a WHO global commission was able to certify the worldwide eradication of smallpox, achieved by a combination of quarantines and vaccination. The last known natural case of the disease occurred in 1977 in Somalia. Government stocks of the virus remain a biological warfare threat, but the achievement may still, perhaps, be considered the most singular event in the Pharmaceutical Century, the disease-control equivalent of landing a man on the moon. By 1982, vaccine production ceased. By the 1990s, a controversy arose between those who wanted to maintain stocks in laboratories for medical and genetic research purposes (and possibly as protection against clandestine biowarfare) and those who hoped to destroy the virus forever.

On a lesser but still important note, the first leprosy vaccine using the nine-banded armadillo as a source was developed in 1979 by British physician Richard Rees at the National Institute for Medical Research (Mill Hill, London).

Toward a healthier world

In 1974, the WHO launched an ambitious Expanded Program on Immunization to protect children from poliomyelitis, measles, diphtheria, whooping cough, tetanus, and tuberculosis. Throughout the 1970s and the rest of the century, the role of DDT in vector control continued to be a controversial issue, especially for the eradication or control of malaria and sleeping sickness. The WHO would prove to be an inconsistent ally of environmental groups that urged a ban of the pesticide. The organization’s recommendations deemphasized the use of the compound at the same time that its reports emphasized its profound effectiveness.

Of direct interest to the world pharmaceutical industry, in 1977 the WHO published the first Model List of Essential Drugs: “208 individual drugs which could together provide safe, effective treatment for the majority of communicable and noncommunicable diseases.” This formed the basis of a global movement toward improved health provision as individual nations adapted and adopted this list of drugs as part of a program for obtaining these universal pharmacological desiderata.

That ’70s showdown

Medical marvels and new technologies promised excitement at the same time that they revealed more problems to be solved. A new wave of computing arose in Silicon Valley. Oak Ridge National Laboratory used lasers to detect a single atom for the first time: one atom of cesium in the presence of 10¹⁹ argon atoms and 10¹⁸ methane molecules. And biotechnology was born as both a research program and a big business. The environmental movement contributed not only a new awareness of the dangers of carcinogens, but a demand for more and better analytical instruments capable of extending the range of chemical monitoring. These would make their way into the biomedical field with the demands of the new biotechnologies. The pharmaceutical industry would enter the 1980s with one eye on its pocketbook, to be sure, feeling harried by economics and a host of new regulations, both safety and environmental. But the other eye looked to a world of unimagined possibilities transformed by the new DNA chemistries and by new technologies for analysis and computation.


© 2000 American Chemical Society
